Coursera is a great site which offers online courses covering a large range of topics such as engineering, data science, machine learning, and mathematics. Some courses are grouped into specializations, or even full degrees from universities such as Imperial College London. While you can browse these courses and filter on the areas that matter to you, I wondered whether analysing the stats on all of them at once could reveal any courses that stand out from the crowd.

In this post I describe how I went about collecting this data and how I then analysed it, using Python for both. While I focus on Coursera data, the post can also serve as a high-level guide to scraping data from the web in general. All of the code mentioned in this post is available on my GitHub.

Investigating Where the Data Comes From

The first step for any data scraping task is to look at a page that contains the data we want to scrape. In this case, the best place to start is a page which loads a lot of courses, so we can see how the site itself gathers that data. A page such as https://www.coursera.org/browse is the natural place to begin.

From here we look at how this data is being populated on the page, because we’ll want to reproduce that method in our data scraping if possible. We check the following sources in order, from most to least convenient to scrape:

- API Requests - Open the developer tools, look at the network requests being made, and filter by “XHR” requests only. Does anything return the data we want to scrape? Look for requests that return JSON or XML, which is normally the output format for this type of API call.

- DOM Variables - Type “window” in the console to view all of the JavaScript variables created on the page, some of which might be used for storing data, such as a dataLayer used for analytics tracking. Executing some JavaScript to read all of the data within such an object is simple.

- Page HTML - This approach won’t let you down, but how easy it is depends on how the data you’re after is laid out on the page, and whether its HTML elements follow a readable class/id naming convention. If they do, you can use jQuery or an HTML parser to return all values from elements with certain id/class values.
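For the Page HTML option, here is a minimal sketch using Python’s standard-library html.parser instead of jQuery. The class name course-name and the sample markup are hypothetical; a real page needs its own selectors, and content that is built by JavaScript won’t appear in the raw HTML at all.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextCollector(HTMLParser):
    """Collect the text content of every element that has a given CSS class."""

    def __init__(self, target_class: str):
        super().__init__()
        self.target_class = target_class
        self.match_depth = 0   # > 0 while inside a matching element
        self.text_parts = []   # text pieces of the element currently being read
        self.matches = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.match_depth:
            self.match_depth += 1  # a nested tag inside a matching element
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self.match_depth = 1
            self.text_parts = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.match_depth:
            return
        self.match_depth -= 1
        if self.match_depth == 0:
            self.matches.append("".join(self.text_parts).strip())

    def handle_data(self, data):
        if self.match_depth:
            self.text_parts.append(data)


def texts_by_class(html: str, target_class: str) -> list:
    """Return the text of each element whose class list contains target_class."""
    parser = ClassTextCollector(target_class)
    parser.feed(html)
    parser.close()
    return parser.matches


# Hypothetical markup standing in for a real course listing page
sample = ('<ul><li><h2 class="card course-name">Machine <b>Learning</b></h2></li>'
          '<li><h2 class="course-name">Python for Everybody</h2></li></ul>')
print(texts_by_class(sample, "course-name"))
```

Tracking nesting depth means text inside child tags such as `<b>` is kept as part of the matching element, which is roughly what reading `.text()` from a jQuery selection would give you.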

The course browse page we started on makes a request to algolia.net which returns JSON with some relevant data about the courses on the page, so it’s the API approach for us. What luck! This request goes to a “queries” endpoint and has query parameters that influence the results returned, including the API key that lets us return anything at all.

While I don’t know exactly what Algolia is, I do know that they have great documentation for their RESTful API, which will come in useful later. Browsing around other pages of Coursera and looking at what other Algolia requests are being made, I found a great URL which returns all of the available courses:

Don’t let the index name fool you: these aren’t test products, they’re actual live courses on Coursera. Referring back to the Algolia documentation, we can return more courses per request with the hitsPerPage parameter and look at other pages with the page parameter. The problem with this data, though, is that it’s incomplete: we also want information about Specializations (each made up of several of the individual courses we now have) and how much everything costs.
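Rather than hand-editing the URL, the hitsPerPage and page parameters can be set programmatically. This is a minimal sketch using urllib.parse; the helper name with_paging and the example base URL are placeholders, since the real request URL is whatever you captured in the developer tools. Note that converting the query to a dict collapses any repeated parameter to its last value.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_paging(base_url: str, page: int, hits_per_page: int = 1000) -> str:
    """Return base_url with its hitsPerPage and page query parameters set."""
    parts = urlsplit(base_url)
    # Keep any existing parameters, including blank ones such as "query="
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    params["hitsPerPage"] = str(hits_per_page)
    params["page"] = str(page)
    return urlunsplit(parts._replace(query=urlencode(params)))


# Placeholder URL: substitute the Algolia request captured in the browser
print(with_paging("https://example.algolia.net/1/indexes/products?query=", page=2))
```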

The Algolia requests weren’t the only API requests being made; there were in fact calls to coursera.org/api/ as well (a bit of a giveaway, that one, and I will admit I investigated it first). These two endpoints, with the appropriate query parameters, return Specialization and price data:

- https://www.coursera.org/api/onDemandSpecializations.v1

- https://www.coursera.org/api/productPrices.v3

Now that we know where all this data lives, we can start writing some code to automate collecting it.

Automating the Data Scraping

Let me start this section by stating an important rule for data scraping: when you start making lots of requests to a public-facing site to collect your data, do so ethically and respectfully. Remember that hosting this data costs money, and having some nerd come along and collect all of it without paying, or at least seeing an ad, isn’t what they had in mind when they chose to make it publicly available. I approach this rule by doing the following:

- Put a small time delay between each request so they aren’t frequent enough to overload their servers. This applies to API requests as well as HTML page requests, though you may find that public APIs already have a usage limit in place, so you’ll be forced to slow down anyway.

- Store the data locally once you have collected it. This may seem obvious, but while you’re writing the part of your program that analyses the newly scraped data, it can work from a local copy. You don’t need to hit the site every time during development.

- Edit your User-Agent in the request to avoid looking like a bot. If you don’t want to be blocked by some automatic firewall or DDoS protection service, pretend you’re an iPhone user or something. Wait a minute, this one doesn’t sound ethical at all… moving on.
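The first two rules can be combined into one small helper. This is a sketch rather than the code used in the rest of this post: fetch_json_politely and its cache_path and wait_length parameters are hypothetical names. It returns the locally cached copy if one exists; otherwise it fetches the URL, saves the response to disk, and sleeps before returning.

```python
import json
import time
import urllib.request
from pathlib import Path


def fetch_json_politely(url, cache_path, wait_length=1.0):
    """Return parsed JSON for url, reusing a local cache file when available."""
    cache = Path(cache_path)
    if cache.exists():
        # Rule 2: work from the local copy instead of hitting the site again
        return json.loads(cache.read_text(encoding="utf-8"))

    with urllib.request.urlopen(url) as response:
        data = json.loads(response.read().decode("utf-8"))

    cache.write_text(json.dumps(data), encoding="utf-8")
    # Rule 1: pause so the caller's next request isn't made immediately
    time.sleep(wait_length)
    return data
```

Because cached responses return without sleeping, re-running the analysis during development costs nothing, and only genuinely new requests are rate limited.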

We’re quite fortunate in that the Algolia request returns a large number of courses at a time, along with most of the fields we want. There’s a limit to how many courses are returned on one “page”, though, so we’ll need to loop through all of the pages. I was lazy and looked manually to see the total number of pages when returning 1000 courses per page, but the proper way would be to check whether the number of courses returned on a given page is less than 1000, which indicates it is the last page:

```python
import json
import time
import urllib.request

import pandas as pd

# Assumes algolia_url (the Algolia query URL above, ending in "page="),
# product_pages (the index of the last page) and wait_length (seconds to
# wait between requests) have already been defined.
all_products_list = []

# Loop through each of the pages returned for the all products request
for i in range(0, product_pages + 1):
    # Request data from Algolia for the current page
    with urllib.request.urlopen(f'{algolia_url}{i}') as url:
        print(f"Fetching coursera program data on page {i}.")
        page_data = json.loads(url.read().decode())

    # Save page data to a local JSON file.
    with open(f'all-products-{i}.json', 'w') as outfile:
        json.dump(page_data, outfile)

    # Merge all products data into a single list.
    all_products_list = all_products_list + page_data['hits']

    # Wait before scraping the next page
    time.sleep(wait_length)

# Convert raw products JSON data into a dataframe
all_products_df = pd.DataFrame.from_dict(all_products_list)
```
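The “proper way” mentioned above could look something like this sketch. collect_all_hits is a hypothetical helper that takes a function for fetching a single page (for example, one that requests f'{algolia_url}{i}' and returns the parsed JSON), so the stopping logic can be checked without touching the network. It stops as soon as a page comes back with fewer than hits_per_page results; if the total is an exact multiple of the page size, that costs one extra request for an empty page. Algolia’s search responses also include an nbPages field, which would be another way to know when to stop.

```python
import time
from typing import Callable, List


def collect_all_hits(fetch_page: Callable[[int], dict],
                     hits_per_page: int,
                     wait_length: float = 0.0) -> List[dict]:
    """Fetch pages 0, 1, 2, ... until a short page signals the end."""
    all_hits: List[dict] = []
    page = 0
    while True:
        hits = fetch_page(page).get("hits", [])
        all_hits.extend(hits)
        # A short (or empty) page means there is nothing left to fetch
        if len(hits) < hits_per_page:
            return all_hits
        page += 1
        # Be polite between requests
        time.sleep(wait_length)
```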

The raw data returned from this request needs a bit of cleaning before we can move on to the next stage. We want a field with the course ID, and a separate collection of all of the specializations. There are already fields (entityType) that distinguish specializations from courses, and the objectID field for both is prefixed with either “course~” or “s12n~”, which we’ll want to remove for later.

The pandas dataframe we’ve just created will be the main data type used later for analysis. While scraping and looping through the data, though, I’m used to working with key-value data types (Python dicts), so that’s what we’ll continue using for now.

```python
# Group Courses, and clean data before creating dict
courses_df = all_products_df.loc[all_products_df['entityType'] == 'COURSE'].reset_index(drop=True)
courses_df['id'] = courses_df.apply(lambda row: row['objectID'].replace('course~', ''), axis=1)
courses_df = courses_df.set_index('id')
courses = courses_df.to_dict('index')

# Group Specializations, and clean data before creating dict
specializations_df = all_products_df.loc[all_products_df['entityType'] == 'SPECIALIZATION'].reset_index(drop=True)
specializations_df['id'] = specializations_df.apply(lambda row: row['objectID'].replace('s12n~', ''), axis=1)
specializations_df = specializations_df.set_index('id')
specializations = specializations_df.to_dict('index')
```
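As an aside, the row-wise apply calls above can be swapped for pandas’ vectorised string methods. This is a minimal sketch; split_products is a hypothetical helper name, and the anchored regex only strips “course~” or “s12n~” at the start of the objectID, whereas str.replace in the original would remove that text anywhere in the string.

```python
import pandas as pd


def split_products(all_products_df: pd.DataFrame):
    """Return (courses, specializations) dicts keyed by the cleaned objectID."""
    products = all_products_df.copy()
    # Strip the "course~" / "s12n~" prefix from every objectID in one go
    products['id'] = products['objectID'].str.replace(r'^(course|s12n)~', '', regex=True)
    courses_df = products[products['entityType'] == 'COURSE'].set_index('id')
    specs_df = products[products['entityType'] == 'SPECIALIZATION'].set_index('id')
    return courses_df.to_dict('index'), specs_df.to_dict('index')
```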

We now have a list of all courses and specializations, but we don’t know which courses belong to which specialization yet. To find out, we use the Coursera API we found earlier and look at one specialization at a time, as each response lists all of the courses it includes. As we loop through the specializations, we update both the courses and specializations dicts from the previous step, adding the associated specialization ID to each course and the course ID to each specialization.

```python
# The specializations dict built in the previous step, under a shorter name
specs = specializations

# Loop through all specializations to collect their courses.
for spec_id in list(specs):
    specs[spec_id]['courses'] = []

    # Build the specialization's API URL from its slug.
    slug = specs[spec_id]['objectUrl'].replace("/specializations/", "")
    spec_url = f"https://www.coursera.org/api/onDemandSpecializations.v1?q=slug&slug={slug}&fields=courseIds,id"

    # Make a request to that URL and parse the JSON response.
    with urllib.request.urlopen(spec_url) as url:
        spec_data = json.loads(url.read().decode())
    course_ids = spec_data['elements'][0]['courseIds']

    # Loop through each course in the specialization.
    for course_id in course_ids:
        # Skip courses we don't already have a record of from Algolia.
        if course_id not in courses:
            continue
        # Initialise the course's specializations list if required.
        courses[course_id].setdefault('specializations', [])
        # Add the specialization to the course, and vice versa.
        if spec_id not in courses[course_id]['specializations']:
            courses[course_id]['specializations'].append(spec_id)
        if course_id not in specs[spec_id]['courses']:
            specs[spec_id]['courses'].append(course_id)

    # Wait before scraping the next specialization.
    time.sleep(wait_length)

# Convert back to a dataframe and save to local JSON.
specs_df = pd.DataFrame.from_dict(specs, orient='index')
specs_df.to_json('specializations.json')
```

To improve readability, I removed some print statements I used to log the progress of this loop while it runs. It can take a little while to request all 400+ specializations, especially as we are deliberately spacing out our requests to ease the load on their servers (and to avoid being blocked).
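The bookkeeping inside that loop can be pulled out into plain functions, so it can be checked without calling the API at all. This is a sketch: course_ids_from_response and link_specialization are hypothetical names, and the response shape (elements[0].courseIds) is taken from the request above. Unlike the loop, link_specialization uses setdefault for both lists, so calling it twice for the same specialization doesn’t wipe its course list.

```python
def course_ids_from_response(spec_data: dict) -> list:
    """Pull the course IDs out of an onDemandSpecializations.v1 response."""
    elements = spec_data.get("elements") or []
    return elements[0].get("courseIds", []) if elements else []


def link_specialization(courses: dict, specs: dict, spec_id: str, course_ids: list) -> None:
    """Record the course/specialization relationship in both dicts, without duplicates."""
    spec_courses = specs[spec_id].setdefault("courses", [])
    for course_id in course_ids:
        if course_id not in courses:
            continue  # only link courses we already know about from Algolia
        course_specs = courses[course_id].setdefault("specializations", [])
        if spec_id not in course_specs:
            course_specs.append(spec_id)
        if course_id not in spec_courses:
            spec_courses.append(course_id)
```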

The last piece of the data scraping puzzle is to obtain the pricing data for the courses, as not all courses are priced equally. A Masters in Data Science from Imperial might be the best course available, but I’d maybe want to start somewhere £15,000 cheaper.

```python
import urllib.error

# Pricing data for courses
for course_id in list(courses):
    # If there is no price data, it is free. I think.
    courses[course_id]['price'] = 0
    price_url = f"https://www.coursera.org/api/productPrices.v3/VerifiedCertificate~{course_id}~GBP~GB"

    # Request price data from the URL and update the price in the course dict.
    try:
        with urllib.request.urlopen(price_url) as url:
            price_data = json.loads(url.read().decode())
        courses[course_id]['price'] = price_data['elements'][0]['amount']
    except (urllib.error.URLError, KeyError, IndexError) as error:
        # Log the error and keep the default price of 0
        print(f"No price data for course {course_id}: {error}")

    # Wait before scraping the next course
    time.sleep(wait_length)

# Convert back to a dataframe and save to JSON for running locally.
courses_df = pd.DataFrame.from_dict(courses, orient='index')
courses_df.to_json('courses.json')
```

The local JSON files we’ve created can now be used whenever we want to skip straight to the analysis. When we want the most up-to-date courses and specializations we can query the APIs again, but the data shouldn’t change very often.
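Reading those files back is a one-liner each with pandas.read_json. This is a minimal sketch, assuming the file names used above; load_local_data is a hypothetical helper, and convert_axes=False is a choice made here so that IDs which happen to look numeric stay as strings instead of being converted.

```python
import pandas as pd


def load_local_data(courses_path="courses.json", specs_path="specializations.json"):
    """Load the dataframes saved by the scraping step, keeping IDs as strings."""
    courses_df = pd.read_json(courses_path, convert_axes=False)
    specs_df = pd.read_json(specs_path, convert_axes=False)
    return courses_df, specs_df
```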

A Little Bit Of Data Prep

We have all of our courses and all of our specializations, but at the moment this data is separate and could do with some cleaning as well. Before starting the analysis, we’ll create some dataframes that properly link this data and fill in the gaps.

```python
# Add some display fields for later use
specs_df['partners_str'] = specs_df['partners'].apply(lambda partners: 'Offered by ' + ' & '.join(partners))
specs_df['specialization'] = specs_df['name'] + '\n' + specs_df['partners_str']

# Replace missing course lists with empty lists so they can be expanded
specs_df['courses'] = specs_df['courses'].apply(lambda d: d if isinstance(d, list) else [])

# Expand the lists we want to aggregate in the specializations table
# (expand_list is a helper defined in the full code on GitHub)
specs_with_expanded_courses_df = expand_list(specs_df, 'courses', 'course_id')
specs_with_expanded_partners_df = expand_list(specs_df, 'partners', 'partner_name')

# Join to the courses dataframe for course-level metrics. Both frames have an
# avgLearningHours column, so pandas suffixes them _x (specs) and _y (courses).
merged_specs_df = pd.merge(specs_with_expanded_courses_df, courses_df, left_on='course_id', right_index=True)

# Sum course hours and prices per specialization, then tidy the column name
aggd_specs_df = (merged_specs_df
                 .groupby('specialization', as_index=False)[['avgLearningHours_y', 'price']]
                 .sum())
aggd_specs_df.rename(columns={'avgLearningHours_y': 'avgLearningHours'}, inplace=True)
```
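The expand_list helper isn’t shown in this post. Judging from how it’s used, it turns each element of a list column into its own row under a new column name, which pandas’ DataFrame.explode does directly. Here is a hypothetical stand-in built on explode; the helper in the GitHub code may differ. Empty lists become a single NaN row, which then drops out of the inner merge with courses_df.

```python
import pandas as pd


def expand_list(df: pd.DataFrame, list_col: str, new_col: str) -> pd.DataFrame:
    """Hypothetical stand-in: one row per element of df[list_col], stored in new_col."""
    # explode repeats the other columns (and the index) for every list element
    return df.explode(list_col).rename(columns={list_col: new_col})
```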

We’ve created some display-friendly fields and kept only the columns we’re interested in, so we can now proceed with the analysis.

Let’s Answer Some Questions

To grow our understanding of the data, let’s set out to answer some questions which gradually dive deeper into the data as we learn more about it:

What are some basic stats for enrolments, average learning hours, average rating, and price for all specializations?

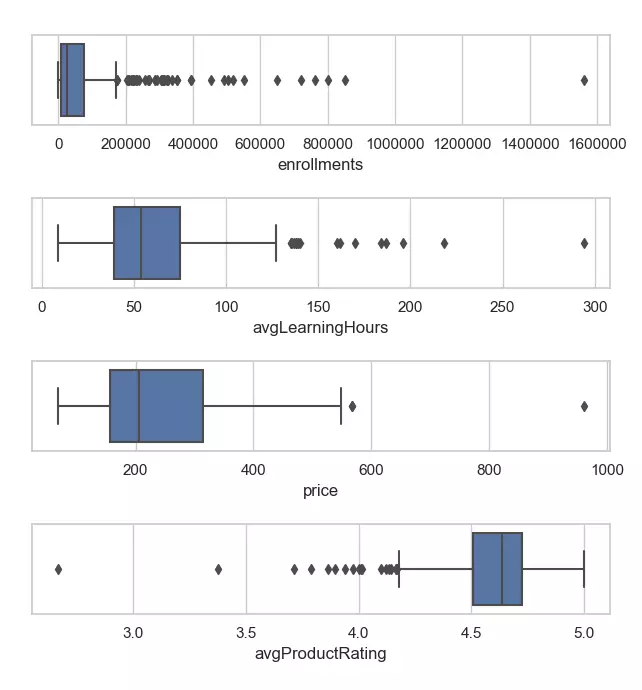

To quickly see some basic figures for these fields, we can use pandas’ describe method, which outputs summary statistics such as the count, mean, min, max and quartiles (the 50% quartile being the median). This only produces a table of numbers, though, so it’s better to turn to seaborn to visualise the spread with box plots:

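```python
import matplotlib.pyplot as plt
import seaborn as sns

# One box plot per metric, stacked vertically
fig, axes = plt.subplots(4, 1, figsize=(10, 12))
sns.boxplot(x='enrolments', data=specs_df, ax=axes[0])
sns.boxplot(x='avgLearningHours', data=aggd_specs_df, ax=axes[1])
sns.boxplot(x='price', data=aggd_specs_df, ax=axes[2])
sns.boxplot(x='avgProductRating', data=specs_df, ax=axes[3])
plt.tight_layout()
plt.show()
```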

There’s a huge jump from the maximum enrolments to the mean, and even to the 75% quartile. This tells us that a large number of specializations on Coursera have relatively few enrolments, while a handful are very popular. Everything else looks as expected, and it’s good to see what I can expect for average learning hours and cost. One thing missing from the box plots, but included in the describe output, is the count of specializations: there are 436 of them, so a lot to choose from!

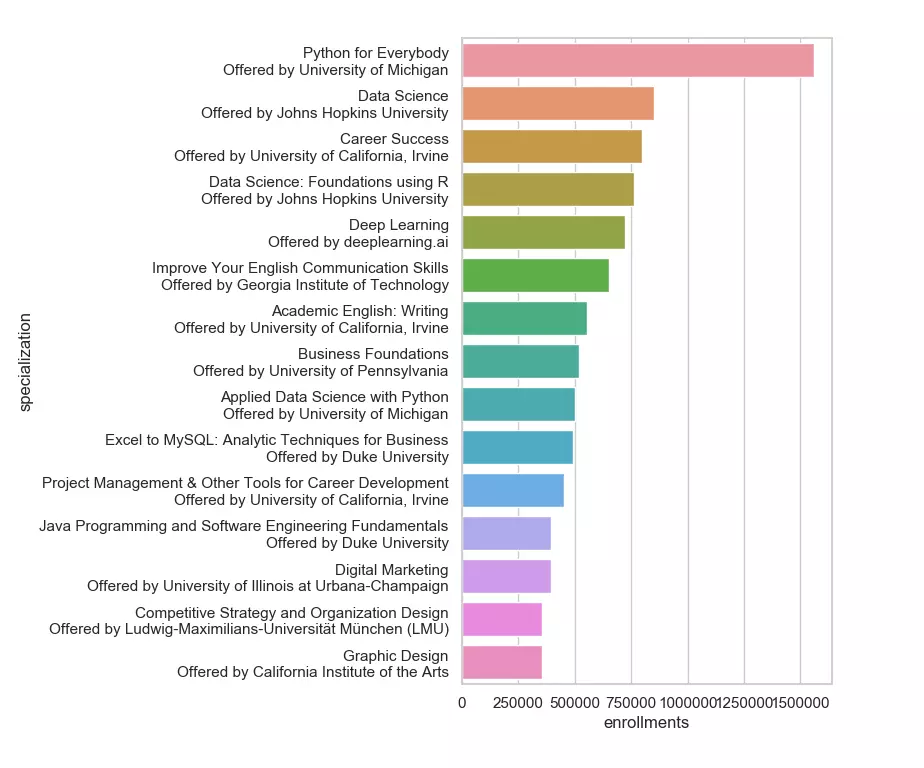
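To see how a few outliers pull the mean away from the median, here is describe on a toy dataframe. The numbers are made up for illustration and are not real Coursera figures; with the scraped data you would call specs_df[['enrolments', 'avgProductRating']].describe() instead.

```python
import pandas as pd

# Toy numbers (not real Coursera data) with one runaway specialization
example_specs_df = pd.DataFrame({
    "enrolments": [1_000, 2_000, 3_000, 250_000],
    "avgProductRating": [4.5, 4.6, 4.7, 4.9],
})

summary = example_specs_df.describe()
print(summary)

# The mean is dragged far above the median by the single outlier
print(summary.loc["mean", "enrolments"], summary.loc["50%", "enrolments"])
```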

What are the most popular specializations?

Those outliers in the enrolments box plot caught my attention, so let’s use a bar chart to find out which specializations they are:

```python
# enrolments is a column of specs_df; aggd_specs_df only holds summed hours and price
top_specs_enrolments = specs_df.nlargest(15, 'enrolments')
sns.barplot(x='enrolments', y='specialization', data=top_specs_enrolments)
```

Coursera started out with machine learning courses, so it’s no surprise to see that the most popular specializations are in this category. I’m a little annoyed that none of the scraped data includes a “category” field, as that would be quite interesting to delve into.

Conclusion

I’m writing this conclusion almost two years after I started writing this post, so I suppose I should give a short update explaining why, and what has happened in that time. Long story short, a lot of significant and unpredictable life events happened which completely derailed me, and that’s not even including the global pandemic that also took place. Ultimately, the obstacles I had to overcome helped me grow as a person and learn to appreciate everything I was taking for granted, such as my friends, family, relationships, and even time itself. So rather than keep putting off the things I want to do or work towards, I’m now actively doing them, so that I won’t later regret never having achieved them, or at least never having tried. And yes, that includes finishing a little blog post about web scraping.

If this entire post wasn’t a big enough clue, I’m quite interested in data science and engineering. Back when I was analysing the available courses, I was quite pleased to see that the top courses on this site are based on these very subjects. If I had spent more time looking into how to rank courses, I might have singled out a few to choose from, but I eventually went with a course focused on tools I had been using at work and wanted to learn more about.

The course I ended up choosing was the Preparing for Google Cloud Certification: Cloud Data Engineer Professional Certificate specialization, which was created by Google and covers many different areas of data engineering within GCP. As it is a specialization made up of six courses, the quality of the lessons was somewhat inconsistent, with some simply copying and pasting code, which isn’t ideal. I would still recommend it if you’re interested in data engineering, although I feel there’s still much to learn. More content for another blog post in 2023, perhaps.