Have you ever accidentally deleted an entire database worth of data? This was precisely the nightmare I found myself in recently with my personal budget app after trying to tidy up some old transactions. Fortunately I found an old backup file, but it was over 18 months old which seemed far too big of a gap of data for me to accept. Thus began a journey full of highs and lows to rebuild the missing information using other data sources and digging into the source code of the app itself, which I will describe below.

The Backup File Mystery

The budgeting app I am using is called Monefy for Android, and while it does support exporting data to an easily readable CSV, data can only be “restored” from a bespoke backup file. If I was to restore my missing data then I’d need to understand what this backup file was. This file has no type, and opening it in something like notepad revealed no clues as to what it was. In order to unlock the secrets of this file I’d need to learn how it is created, which required inspecting the app code itself.

The file format used for installing and packaging apps on Android is called an APK (Android Package Kit). You can find APKs online, and also tools which can decompile them to let you review the code hidden within. Most of the time it’s a mess to navigate through (at least in my experience) but you can sometimes stumble across something insightful which I fortunately did with the Monefy APK.

It seemed as if all logic related to the backup and restore process was found in a DatabaseBackupHelper.java file. Within this file I learned that the backup file was just an encrypted SQLite database, and the key for encrypting it was miraculously stored in the file too.

public class DatabaseBackupHelper {

public static final String BACKUP_KEY = "MyDifficultPassw"; // <- This was seriously the encryption password.

...

While you can decompile an APK file to see the app source code, you can’t just change a few lines and recompile it into a different app (at least not easily). The challenge now was to find a way to execute this logic myself and use it to create and parse my own backup files.

The Return to Java

Within the file contained standard Java encryption and decryption methods, so I looked up a simple example from CodeJava as a starting point:

import jbpackage.CryptoUtils;

import java.io.File;

/**

* A tester for the CryptoUtils class.

* @author www.codejava.net

*

*/

public class CryptoUtilsTest {

public static void main(String[] args) {

String key = "MyDifficultPassw";

File inputFile = new File("monefy_backup");

File decryptedFile = new File("monefy_backup_decrypted.db");

// I also tested encrypting

//File inputFile = new File("monefy_backup_new.db");

//File encryptedFile = new File("monefy_backup_encrypted");

try {

CryptoUtils.decrypt(key, inputFile, decryptedFile);

//CryptoUtils.encrypt(key, inputFile, encryptedFile);

} catch (Exception ex) {

System.out.println(ex.getMessage());

ex.printStackTrace();

}

}

}

I hadn’t written any Java in a while (it was the first programming language I learned though) so most of the time during this stage was relearning how to run Java. Once I eventually figured that out I was able to successfully run the above logic. I tested the validity of the decrypted SQLite database file using an online viewer and could see all of the data, it was beautiful. All of my transactions were visible in the database, a moment of true eureka. It wasn’t the deleted data though, but I was making great progress with understanding how the backup file worked, which I knew I’d need to recreate with records that I added myself.

The Switch to Python

Rather than continuing to battle with Java I decided to transition to a language I was more comfortable using for the decryption process: Python. This task proved more difficult than expected due to the differences in the encryption libraries used by both. After some investigation, I found that the javax.crypto.Cipher module defaults to the less secure “ECB” mode when using the “AES” algorithm.

For those unfamiliar with these “modes”, here’s how ChatGPT explains it:

In encryption, the mode determines how to repeatedly apply a cipher’s single-block operation to securely transform amounts of data larger than a block. ECB, which stands for Electronic Codebook, is considered less secure because it encrypts identical plaintext blocks into identical ciphertext blocks, thus not hiding data patterns well. This is why other modes like CBC (Cipher Block Chaining) or GCM (Galois/Counter Mode) are often recommended as they offer better security properties.

Equipped with this knowledge, I was able to use the correct mode that allowed my Python encrypted database files to be accepted by the app. Below shows the final version of this logic which went through a few revisions as I was working on this issue:

from Crypto.Cipher import AES

class MonefyCrypto:

__key = 'MyDifficultPassw'

@staticmethod

def __process_file(input_file_path, output_file_path, operation):

try:

cipher = AES.new(MonefyCrypto.__key.encode(), AES.MODE_ECB)

with open(input_file_path, 'rb') as input_file:

input_bytes = input_file.read()

if operation == 'encrypt':

output_bytes = cipher.encrypt(input_bytes)

elif operation == 'decrypt':

output_bytes = cipher.decrypt(input_bytes)

else:

raise ValueError(f"Unsupported operation: {operation}")

with open(output_file_path, 'wb') as output_file:

output_file.write(output_bytes)

except Exception as ex:

raise Exception(f"Error {operation}ing file", ex)

@staticmethod

def encrypt_file(input_file_path, output_file_path):

MonefyCrypto.__process_file(input_file_path, output_file_path, 'encrypt')

@staticmethod

def decrypt_file(input_file_path, output_file_path):

MonefyCrypto.__process_file(input_file_path, output_file_path, 'decrypt')

With everything relating to the creation of the encrypted backup file working in Python, the next task would be the biggest of this entire endeavour: recreating 18 months worth of missing transaction data.

The Biggest Prompt I’ve Written

The primary considerations during this process were to maintain the existing tables in the database by only adding new transactions from existing categories. I didn’t want to risk corrupting anything by making changes which the database wasn’t able to link with other tables (a precursor of things to come).

Utilizing a detailed prompt with GPT-4 and thorough explanation of how the fields in the exported CSV should map to the table columns, I was able to generate code that was 90% of what I wanted. The majority of modifications involved adjustments to the logic around the Unix timestamp to ensure it perfectly matched the format shown in the table. This is generally my experience with generative AI so far, it gets you most of the way there but you have to finish it yourself. Here is that detailed prompt:

Write some logic in python that will read transactions data in the format of the following CSV file:

'''

date,account,category,amount,currency,converted amount,currency,description

01/11/2017,Cash,Hobbies ,-4.99,GBP,-4.99,GBP,"Camera Lens"

'''

It must then upload this data to a sqlite table called transactions which has data in the following schema:

'''

account_id VARCHAR

amount VARCHAR

amountCents BIGINT

category_id VARCHAR

createdOn BIGINT

note VARCHAR

scheduleId VARCHAR

deletedOn BIGINT

_id VARCHAR

localHashCode INTEGER

remoteHashCode INTEGER

hashCode INTEGER

'''

Here is some example data from the sqlite transactions table represented as a CSV file:

'''

account_id,amount,amountCents,category_id,createdOn,note,scheduleId,deletedOn,_id,localHashCode,remoteHashCode,hashCode

10000000-0000-0000-0000-000000000001,0,5500,10000000-0000-0000-0000-000000000001,1510231796154,Subway lunch,NULL,NULL,cca202e6-0fd7-4156-9fe1-1b8c8a75d7f0,408580565,408580565,0

10000000-0000-0000-0000-000000000001,0,800,10000000-0000-0000-0000-000000000001,1510311184056,Pastry ,NULL,NULL,e5ef29d1-92af-4e53-b174-118c7982bad2,1893744997,1893744997,0

10000000-0000-0000-0000-000000000001,0,12000,995048dd-279c-451f-97bc-bf187fae5747,1512552805176,Disaster Artist,NULL,NULL,922cb861-b03f-42d6-b9fe-76d8df620032,321950014,321950014,0

'''

Here are instructions on how to generate the contents of each column row in the sqllite table based on the CSV data:

account_id: This value would be found by looking for the 'account' value from the CSV in the 'title'

column from a sqlite table called 'accounts'. The account_id is present in the '_id' column within this accounts table.

amount: This can just be '0'.

amountCents: This is converting the 'amount' value from the CSV into a positive integer format by multiplying by 1000.

category_id: This value would be found by looking for the 'category' value from the CSV in the 'title' column from a sqlite table called 'categories'. The category_id is present in the '_id' column within this categories table.

createdOn: This is the unix timestamp value for the 'date' value in the CSV. To avoid any daylight sayings issues, convert the date from the CSV into the unix timestamp equivalent for occurring at 10:00 for all records.

note: This is the 'description' value from the CSV.

scheduleId: This will always be NULL.

deletedOn: This will always be NULL.

_id: This must be a unique GUID value that is generated for each row of the data.

localHashCode: This must be a random integer value either positive or negative.

remoteHashCode: This must be the same as the localHashCode value.

hashCode: This must always be 0.

The resulting code made it possible to use a CSV in the format of the app exported files (the readble CSVs not the backup files), and convert it into a database file that I could encrypt. Up until this point I had been flying through the tasks with immense good fortune, but all of that was about to change.

The Lows of Database Corruption

It became quite apparent almost immediately how long of a task recreating 18 months worth of transactions was going to take me. While I exported what I could from various other data sources like my bank, Splitwise, Amazon purchases, etc. the time it would take to list these all in the CSV for reupload would take a while. I was planning to focus on one month of data each evening until it was complete, and was happy with that target.

While I could live with the tediousness of the data input, what I could not deal with so well was the app failing to accept the newly created backup files for no reason. If I tried to add the full months worth of data in one go I would see certain days with corrupted data in it appearing as blank values on the app. If I added that particular day on its own, there would be no error, or uploading the data in smaller batches. So uploading the data in smaller batches was what I did for a while until that stopped working too.

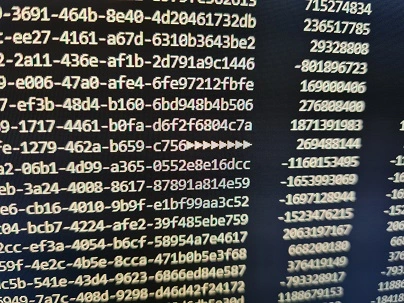

I spent a while trying to understand the cause of this error, and the closest I came was seeing this in the database:

Looking at it now as I write this is giving me flashbacks to the frustrations I was feeling when trying to understand what on earth these arrow characters were and why they had appeared. There was nothing different whatsoever about the particular record that was seeing these, and tweaking the values as a form of trial and error wasn’t revealing any clues. Each new attempt to resolve the issue I had to encrypt a new file, upload it to Google Drive, and try to restore from it from my phone, all quite tedious. I was approaching my breaking point but did my best to persevere.

The Unexpected Rescue

In one of my many attempts to understand the corruption issue, I tested a database that had duplicate rows to see what would happen. When I tried restoring from this version, something different happened: an error message appeared in the app. This was interesting, and what was even more interesting was that it had an option to “Restore to a previously saved version”, which I accepted. By some miracle, it recovered everything, all of the data I lost, all 18 months worth. I was elated.

The only explanation I can think of for this is that the “saved version” was kept somewhere hidden where I hadn’t looked yet (trust me, I looked everywhere for the backup files). Perhaps buried deep in my phones system files, or in a hidden folder on my Google Drive, who knows? I sat there and reflected on the significance of this moment, as if it were a cosmic sign that my determination to continue with the issue has been rewarded somehow.

The Key Takeaways

With all of the work I had been doing working with SQlite and the database files I was able to make the change I originally wanted to make before accidentally deleting the database. I was able to make edits to old records in bulk using SQL commands that I’d learned along the way, which I’ve included in the GitHub repo here. Most importantly I’ve started backing up my transactions data frequently, and upload them into BigQuery as well which I’ll cover in another blog post one day.

The biggest takeaway of this process was without a doubt what the the database corruption issues taught me which was to never give up, even when all hope seems lost.